Dr. Scott Zoldi – Chief Analytics Officer, FICO

A consensus is clearly forming that companies need strong processes to ensure AI is used responsibly and ethically. You need only skim the latest headlines reporting on how AI has created bias over time. For example, the underrepresentation of women in medical research data has reduced the effectiveness of medical treatments for women, as Caroline Criado Perez reports in *Invisible Women*, her renowned book on data bias.


A new report from FICO and market intelligence firm Corinium, The State of Responsible AI, finds that most companies may be deploying AI at significant risk, stemming primarily from undetected instances of bias. The reputational risk and the potential harm to consumers are considerable, which is why businesses need rigorous processes to detect and remove bias across the model lifecycle.

The report, the second annual survey of Chief Analytics, Chief AI and Chief Data Officers, examines how global organisations are applying artificial intelligence technology to business challenges, and how responsibly they are doing so. In addition to a troubling, widespread inability to explain how AI models reach their decisions, the study found that 39% of board members and 33% of executive teams have an incomplete understanding of AI ethics.

Data is always biased; the key is to ensure that bias doesn’t infect AI models

Many enterprises have not yet fully defined or effectively operationalised how they combat AI model bias; in fact, 80% of AI-focused executives are struggling to establish processes that ensure responsible AI use.

Only one in three firms has a model validation team to assess newly developed models, and only 38% say they have data bias mitigation steps built into their model development processes. Securing the resources to ensure AI models are developed responsibly also remains an issue for many: just 54% of respondents said they are able to do this relatively easily, while 46% said it is a challenge.

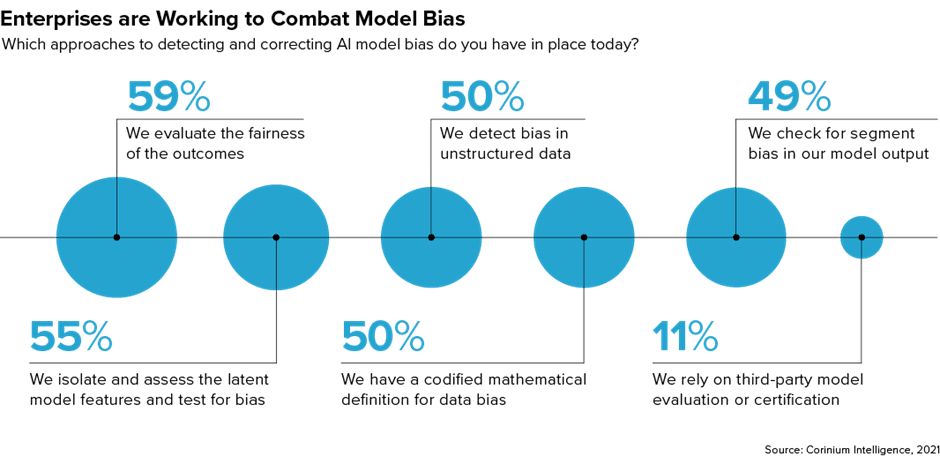

As a result, few enterprises appear to have an ‘ethics by design’ approach in place that would ensure they routinely test for and correct AI bias issues during development. The research shows that enterprises are using a range of approaches to root out the causes of AI bias during model development. However, it also suggests that few organisations have a comprehensive system of checks and balances in place.

Evaluating the fairness of model outcomes is the most popular safeguard in the business community today, with 59% of respondents saying they do this to detect model bias. Half say they have codified a mathematical definition of data bias.
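To make "evaluating the fairness of model outcomes" and "a codified mathematical definition" more concrete, here is a minimal sketch of one common outcome check: compare the rate of positive decisions across groups and report the ratio of the lowest rate to the highest. This is an illustration only, not FICO's or the report's method; the function names, the toy data and the 0.8 threshold (the widely cited "four-fifths" rule of thumb) are assumptions chosen for the example.

```python
from collections import defaultdict

def approval_rates(decisions, groups):
    """Return the share of positive decisions (1) for each group label."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    return {g: positives[g] / totals[g] for g in totals}

def disparate_impact_ratio(decisions, groups):
    """Lowest group approval rate divided by the highest (1.0 means parity)."""
    rates = approval_rates(decisions, groups)
    highest = max(rates.values())
    if highest == 0:
        # No group received any positive decision, so rates are trivially equal.
        return 1.0
    return min(rates.values()) / highest

# Hypothetical model decisions (1 = approve) and group labels for eight applicants.
decisions = [1, 1, 0, 1, 1, 0, 0, 1]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

ratio = disparate_impact_ratio(decisions, groups)
# Assumed policy threshold: flag the model for review if the ratio falls below 0.8.
needs_review = ratio < 0.8
print(f"ratio={ratio:.2f}, needs_review={needs_review}")
```

Here group A is approved 75% of the time and group B 50%, giving a ratio of about 0.67, so the hypothetical model would be flagged. A single ratio like this is only one possible definition of fairness; an organisation would need to decide which metrics and thresholds to codify.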

Governance critical

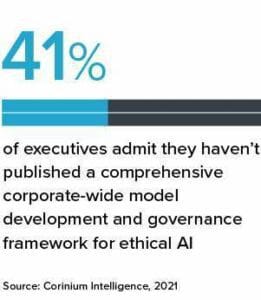

To drive the responsible use of AI in organisations, senior leadership and boards must understand and enforce auditable, immutable AI model governance. They need to establish governance frameworks to monitor AI models to ensure the decisions they produce are accountable, fair, transparent, and responsible.

Executive teams and boards of directors will not succeed with a ‘do no evil’ mantra alone; they need a model governance enforcement guidebook and corporate processes to monitor AI in production. For their part, AI leaders need to establish standards for their firms where none exist today and promote active monitoring.

Without a formal, written governance process, both individuals and organisations are doomed to repeat AI ethics mistakes. The Corinium report found that just 22% of respondents said their enterprise has an AI ethics board to consider questions of AI ethics and fairness. Respondents also clearly acknowledged that some business stakeholders view AI ethics considerations as a drag on the pace of innovation: 62% said they find it challenging to balance the need to be responsible with the need to bring new innovations to market quickly.

This challenge goes all the way to the top of the modern enterprise, with 78% of respondents saying they find it challenging to secure executive support for prioritising AI ethics and responsible AI practices. Educating key stakeholder groups about the risks of AI, and about the importance of complying with AI regulation, are two critical steps toward addressing companies’ blind spots around responsible AI.

The business community is committed to driving transformation through AI-powered automation. However, senior leaders and boards need to be aware of the risks associated with the technology and the best practices to proactively monitor, audit, and mitigate them.

AI has the power to transform the world, but as the popular saying goes, with great power comes great responsibility.

A complete copy of the FICO-sponsored report, The State of Responsible AI, is available to download.

About the author:

Dr. Scott Zoldi is chief analytics officer at FICO, responsible for the analytic development of FICO’s product and technology solutions. While at FICO, Scott has authored more than 100 analytic patents, with 65 granted and 45 pending. Scott serves on two boards of directors, Software San Diego and Cyber Center of Excellence. Scott received his Ph.D. in theoretical and computational physics from Duke University. He blogs at www.fico.com/blogs/author/scott-zoldi.